This is part 2 of a three part series about integrating R and PowerPivot.

- Part 1 – Intro (includes links to necessary components)

- Part 2 – Creating a classification model in R

- Part 3 – Evaluating and Scoring R models in Predixion

In this installment, I’m going to walk through the process of creating a predictive model in R, exporting the model as PMML and importing the model into Predixion Insight. The demo requires packages from Togaware to create models and export them– you can go to the Togaware website and follow their instructions, or you can just launch the R console and enter:

> install.packages("RGtk2")

> install.packages("rattle", dependencies=TRUE)

Now, you may be prompted to install additional packages and you may have to restart R after the library installation is complete – it’s probably a safe bet in any case.

After you’ve installed R and the correct libraries and are in the correct state of R preparedness, you can load the necessary libraries into R using these commands:

> library(rpart)

> library(pmml)

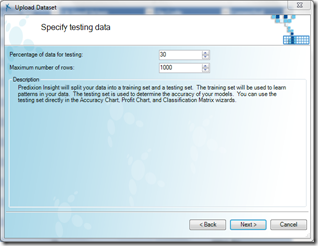

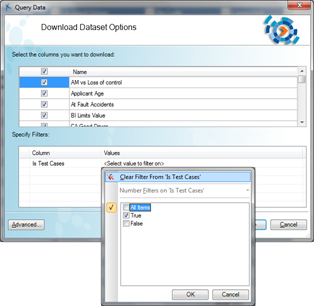

The next step is to load the training dataset into R. As preparation for this article, I created a model (the same model as in our demo video) and used the technique in my previous post (for those who already read that, this was the point) to export the training set and saved the result as a .csv file. I’ve put the training data file up on my SkyDrive if you just want to grab it.

This line reads the.csv file and puts the result into an object called “insurance”. Note that R, due to it’s UNIX origins, uses forward slashes instead of backslashes as directory separators – also it’s case sensitive!

> insurance <- read.csv("Data/Insurance Demo/insurance.train.csv")

Next, I need to create a predictive model. The rpart command creates a tree model on a dataset given a target column. There are many additional parameters controlling how the tree is created, but for the purposes of this blog post, I’m just providing the shortest possible command. This line creates a tree model and places it into an object called “TreeModel” that we can use later

> TreeModel <- rpart(Converted~.,insurance)

The pmml command converts the tree model to PMML, assigning the result to “TreePmml”.

> TreePmml<-pmml(TreeModel)

Finally, use write to output the pmml into a file – note again the forward slashes in the file path!

> write(toString(TreePmml),"Data/Insurance Demo/RModel.pmml")

This creates the file “RModel.pmml” that, if you’re curious, you can open up and examine in notepad or any other text editor. Inspecting the text you’ll see that PMML is simply an XML representation of the patterns that were learned by the R algorithm – if you search for the word “node” you will find the structure of the tree itself.

Now that I have a model in PMML, I can launch Excel with Predixion Insight for Excel installed, switch to the Insight Analytics ribbon and click the My Stuff button. In the My Stuff dialog, I need to click “Import PMML” to load the R model. Note that PMML support is available in the Predixion Insight Free Trial and the Predixion Insight with PMML products.

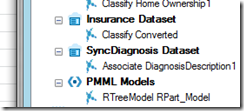

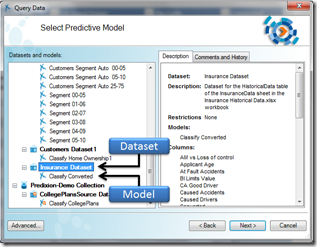

After I click Import PMML and select and import my R model, it appears in the list of datasets and models under a special PMML node in all dialogs where you select a model, such as the My Stuff dialog or the accuracy chart and query wizards. For most purposes PMML models are treated the same as native Predixion models in that you can import and export them, share them with other users, and publish them to shared collections.

In the next installment, I will show you how to use the R model in Predixion to generate accuracy charts, and, as promised, score data in PowerPivot!