We just launched a new, incremental version of Predixion Insight with a whole bucket load of new features – some subtle, some more obvious and in your face. Actually – many you shouldn’t even notice – just have a feeling of the product being “better” all around.

In this posting I’ll just give a quick overview of some of the new additions, and later I’ll get around to providing details on all the goodies. It’s better if you just try them out for yourself anyway – if you are a current user, just log in and you’ll be prompted to update, and if not, what are you waiting for? (One great new “feature” is that we’ve extended the free trial to 30 days, to give you ample time to check everything out!)

So I’m going to tell you about the new features in my trademark stream-of-consciousness, no-particular-order kind of way.

Normalization

The Normalization feature lets you normalize data in Excel or PowerPivot by Z-Score, Min-Max, or Logarithm. It’s actually really cool because you can normalize all the data at the same time instead of column by column. Also, if you’re normalizing PowerPivot data, you can conditionally normalize so you can get Z-scores by group. Way cool.

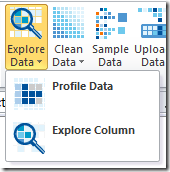
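If you haven’t run into these three normalizations before, here is a minimal Python sketch of what they compute, plus the “Z-scores by group” idea. This is only an illustration of the math, not Predixion Insight’s implementation: the function names are made up for this example, and the use of the population standard deviation and the natural logarithm are assumptions on my part.

```python
import math
from collections import defaultdict
from statistics import mean, pstdev


def z_score(values):
    """Center on the mean and scale by the (population) standard deviation."""
    mu = mean(values)
    sigma = pstdev(values)  # assumption: population, not sample, deviation
    return [(v - mu) / sigma if sigma else 0.0 for v in values]


def min_max(values):
    """Rescale values linearly so the smallest maps to 0 and the largest to 1."""
    lo, hi = min(values), max(values)
    span = hi - lo
    return [(v - lo) / span if span else 0.0 for v in values]


def log_transform(values):
    """Natural log of each value; only defined for strictly positive inputs."""
    return [math.log(v) for v in values]


def z_score_by_group(rows, group_key, value_key):
    """Z-score each row's value against the statistics of its own group."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[group_key]].append(row[value_key])
    stats = {g: (mean(vs), pstdev(vs)) for g, vs in groups.items()}
    result = []
    for row in rows:
        mu, sigma = stats[row[group_key]]
        result.append((row[value_key] - mu) / sigma if sigma else 0.0)
    return result


# Hypothetical sample rows: two regions with very different sales scales.
sales = [
    {"region": "A", "amount": 10},
    {"region": "A", "amount": 20},
    {"region": "B", "amount": 100},
    {"region": "B", "amount": 300},
]
grouped = z_score_by_group(sales, "region", "amount")
```

With the sample rows above, each region is scored against its own mean and spread, so the low value in each region comes out at -1 and the high value at +1 even though the raw amounts differ by an order of magnitude.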

Explore Data

We added additional data exploration options so the “Explore Data” button became the “Explore Data” menu and the previous “Explore Data Wizard” became the “Explore Column Wizard”.

Profile Data provides descriptive statistics about your Excel or PowerPivot data. I won’t say too much about it here since Bogdan already wrote up an excellent post just on this feature!

Also, Explore Column has a new button that lets you look at your data (and bin it!) in log space. All your data scrunched up on the side? Click the “log” button and see it spread out with a nicer, friendlier distribution. This button also shows up in the Clean Data/Outliers Wizard.
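To see why binning in log space helps with scrunched-up data, here is a small Python sketch of equal-width binning done either on the raw values or on their base-10 logarithms. It only illustrates the general idea; the function name, the choice of base 10, and the sample numbers are assumptions for this example, not how the wizard does it internally.

```python
import math


def bin_counts(values, n_bins, log_space=False):
    """Count values per equal-width bin, optionally after a log10 transform."""
    # Log space only makes sense for strictly positive values.
    xs = [math.log10(v) for v in values] if log_space else list(values)
    lo, hi = min(xs), max(xs)
    width = (hi - lo) / n_bins
    if width == 0:
        width = 1.0  # all values identical: everything lands in the first bin
    counts = [0] * n_bins
    for x in xs:
        # Clamp so the maximum value falls in the last bin, not past it.
        idx = min(int((x - lo) / width), n_bins - 1)
        counts[idx] += 1
    return counts


# Hypothetical skewed data: most values are small, one is huge.
data = [1, 2, 3, 5, 8, 10, 20, 50, 100, 1000]
linear = bin_counts(data, 4)
logged = bin_counts(data, 4, log_space=True)
```

On this sample, the linear bins put nine of the ten values in the first bin (`[9, 0, 0, 1]`), while the log-space bins spread them out as `[4, 3, 2, 1]`.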

Getting Started

I guess I really should have put this one first, despite this being stream of consciousness. Anyway, noting that customers were having a hard time finding our sample data, help and our support forums, among other things, we added a handy (and pretty) Getting Started page.

PMML Support

In the Manage My Stuff dialog you can import models from SAS, SPSS, R, and any other PMML source. You can then use all of the model validation tools and the query wizard to score and validate against data in Excel and PowerPivot. What a way to extend your investment in predictive analytics tools to the Excel BI user base!

Insight Index and Insight Log

The Insight Index is an automatically created guide to all of the predictive insights and results you create with Predixion Insight. This guide provides descriptions of every generated report along with links to each report worksheet that are automatically updated when you rename worksheets and change report titles. The Insight Log automatically maintains a trace of all Predixion Insight operations that generate Visual Macros. These operations can be re-executed individually or copied to additional worksheets to easily create custom predictive workflows. Both of these features can be turned on or off in the options dialog.

VBA Connexion

Predixion Insight now supports VBA programming interfaces allowing you to create custom predictive applications inside Excel. It’s like super hard – for example, look at this excerpt I wrote for a demo that applies new data to an existing time series model so you can forecast off of a short series:

Oh, wait – it’s not hard – it’s easy!

Visual Macros

While we’re on the topic of Visual Macros, I should mention that Visual Macros now support all of the Insight Now tasks as well as the Insight Analytics tasks. This means that you can easily encapsulate any operation we perform on the server in a macro and use it to string together your own workflows.

Other stuff working better

There are always little things here and there that are better as well that you don’t notice until you get there – the Query Wizard is cleaner and easier to use. Exceptions – even ones caused by data entry – give you an immediate way to provide feedback directly to the dev team. And I can’t even begin to talk about how much better our website is! (Mostly because I’m out of time to write this post – HA!)

Really – try it out and let me know what you think. If you’ve tried it before, go check out what’s new – if not, now is a wonderful time to do so. It’s lookin’ pretty good.
